Dynamically Added Meta Data Indexed By Google Crawlers

Quick history. On May 23, 2014, the following announcement was made on the Google Webmaster Central Blog:

> In order to solve this problem, we decided to try to understand pages by executing JavaScript. It's hard to do that at the scale of the current web, but we decided that it's worth it. We have been gradually improving how we do this for some time. In the past few months, our indexing system has been rendering a substantial number of web pages more like an average user's browser with JavaScript turned on.

Read the full announcement here.

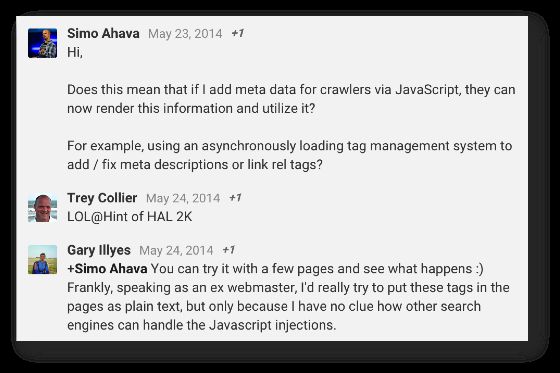

Anyway, since I see the world through GTM-tinted shades, I instantly figured that this should extend not only to the presentational layer but to semantic information as well. At the time, I was thinking in terms of the Search Engine Results Page (SERP) and the Meta Description, which prompted me to ask Gary Illyes, who made the announcement, the following on Google+:

As you can see, I didn’t get a direct answer, so I decided to run some tests. I created some test pages and used Google Tag Manager to inject a Meta Description with a simple Custom HTML Tag that fired on the test pages only:

```html
<script>
  // Create a new <meta> element
  var m = document.createElement('meta');
  // Turn it into a description tag
  m.name = 'description';
  m.content = 'This tutorial has some helpful information for you, if you want to track how many hits come from browsers where JavaScript has been disabled.';
  // Append it as the last child of <head>
  document.head.appendChild(m);
</script>
```

This simple tag creates a new `<meta name="description" content="This tutorial...">` tag and adds it as the last child of the head HTML element.
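One caveat with the tag above: it always appends a new element, so on a page whose template already contains a meta description you would end up with two of them. The snippet below is a minimal sketch of a variant that reuses an existing `<meta name="description">` if one is present and only creates a new one otherwise. The function name `setMetaDescription` is hypothetical, and it takes the document as a parameter purely so the logic can be exercised outside a browser. It sticks to ES5 syntax, like the original tag.

```javascript
// Hypothetical helper: set the page's meta description, reusing an
// existing <meta name="description"> if the template already has one.
// The document is passed in so the logic can be tested without a browser.
function setMetaDescription(doc, text) {
  // Look for an existing description tag inside <head>
  var m = doc.head.querySelector('meta[name="description"]');
  if (!m) {
    // None found: create one and append it as the last child of <head>
    m = doc.createElement('meta');
    m.name = 'description';
    doc.head.appendChild(m);
  }
  // In both cases, overwrite the content attribute
  m.content = text;
  return m;
}

// Usage inside a Custom HTML tag (wrapped in <script> tags):
// setMetaDescription(document, 'This tutorial has some helpful information for you...');
```

Calling it a second time overwrites the same tag instead of stacking another one on top, which keeps the rendered `<head>` clean if the tag fires more than once.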

So I published the test pages, had Google crawl them, and ended up with… failure. For some reason it didn’t work, so I let it be and kept telling people to add meta data directly to the page template.

Then, at SuperWeek Hungary this January, I had the pleasure of meeting Gary Illyes in person, and I told him about my test results. For context, I had just successfully tested (and written about) injecting JSON-LD structured data through GTM. Anyway, Gary was adamant that the crawlers should understand injected meta tags, so I ran another series of tests.

Again, failure. But being your typical Finn, I refused to give up. So I ran three more tests.


Test 1: Pure Test Page

The first test I did was for a new page using my blog template, which had basically no content. Its title was “Test page” and the Meta Description was injected. After creating the page and setting up the tag in GTM, I published the page and asked Google to crawl the page via Webmaster Tools.

However, the SERP refused to show the injected Meta Description. After asking about it, I was told that sometimes the crawlers index a page before rendering the JavaScript, and that I should make a dramatic change to the page to force Google to recrawl it.

I added a lot of text and some images, but nothing changed, so I abandoned the test as a failure.

Test 2: Real Content

The next test I ran was against real content. I had just written a blog post, and I decided to publish it with an injected Meta Description. This time, I was sure that the crawlers would render the Meta Description, as this was a real page with valuable content, and the Meta Description reflected this content very well.

This test was… a failure. This time, I was told that Google’s crawlers sometimes decide not to render the JavaScript version at all. The justification was that JavaScript-heavy pages create an enormous load for the crawlers, so they might skip rendering the JavaScript entirely to spare resources.

Interesting reasoning. It means that the crawlers won’t simply take all the JavaScript you feed them. They still have an internal decision-making mechanism that determines whether or not to render the dynamic content.

Test 3: Real-like Content, Little JavaScript

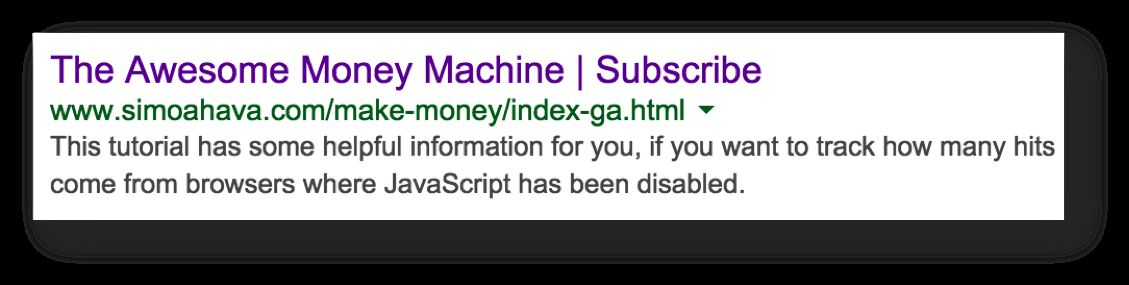

I created a dummy page with real content and an actual call-to-action:

As you can see, I’ve pulled out all my CRO chops to create this page. Do NOT comment on how it looks, it’s just a test!

Anyway, on this page I injected the Meta Description again, asked Google to crawl it and the test was…A SUCCESS:

The Meta Description in the SERP result above has been injected with Google Tag Manager. So it IS true:

Google’s crawlers index dynamically injected meta data as well.

Sorry if this was a no-brainer to you, but I had so many unsuccessful tests behind me that I wanted to be sure.

Implications

First of all, as you can probably see from my tests, this isn’t a 100% reliable method. So don’t delegate the creation and deployment of Meta tags to Google Tag Manager or any other dynamic, client-side solution. The best way is still to add Meta tags directly to the page template.

However, this does open up a world of possibilities for single-page apps, for example. Instead of using a complicated setup of hashbangs and server-side responses, it just might be possible to serve Meta tags purely with JavaScript in the future. Right now I don’t think it’s robust enough to trust your entire app logic with, but in the future, who knows.
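For a single-page app, the same idea would mean updating the description whenever the view changes. The snippet below is a hedged sketch assuming plain hash-based routes; the route table, the default text, and the function names `descriptionFor` and `syncMetaDescription` are all hypothetical. Whether a crawler actually picks up the updated tag is exactly the uncertainty the tests above describe.

```javascript
// Hypothetical route table mapping hash routes to descriptions
var DESCRIPTIONS = {
  '#/': 'Home page of the example app.',
  '#/pricing': 'Pricing plans for the example app.'
};
var DEFAULT_DESCRIPTION = 'An example single-page app.';

// Pick the description for a location.hash value, falling back to a default
function descriptionFor(hash) {
  return Object.prototype.hasOwnProperty.call(DESCRIPTIONS, hash)
    ? DESCRIPTIONS[hash]
    : DEFAULT_DESCRIPTION;
}

// Update (or create, if missing) the meta description for the current route
function syncMetaDescription(doc, hash) {
  var m = doc.head.querySelector('meta[name="description"]');
  if (!m) {
    m = doc.createElement('meta');
    m.name = 'description';
    doc.head.appendChild(m);
  }
  m.content = descriptionFor(hash);
  return m;
}

// In the browser: run once on load and again on every route change
// syncMetaDescription(document, location.hash || '#/');
// window.addEventListener('hashchange', function () {
//   syncMetaDescription(document, location.hash || '#/');
// });
```

Keeping the route-to-description mapping in one place means the view logic and the meta data cannot drift apart, but the template-based approach recommended above remains the safer choice.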

The incredibly interesting and reassuring thing here is that Google’s crawlers are really taking dynamic web pages seriously. This is a huge step in building an actual representation of the web, instead of just crawling source code that might have very little to do with what visitors to your website actually experience and find relevant.